By Yoann Jestin of Ki3 Photonics (www.ki3photonics.com) and Len Zapalowski of Strategic Exits Partners Ltd. (www.exits.partners).

A survey of current articles on quantum computing (QC) suggests that utility-scale quantum computers for commercial applications are at least a decade away. Companies and institutions are currently building quantum computers with tens to hundreds of qubits, and developers aspire to build machines with thousands or even millions of qubits. To date, the largest quantum processor IBM has announced features 433 qubits and can run small-scale quantum algorithms.[1]

Puzzlingly, some companies have already developed quantum optimization algorithms and application software for specific domains such as computational chemistry.[2] Some full-stack companies have even demonstrated that quantum computers can outperform classical computers in specific applications.[3] For example, supply chain problems can be optimized in minutes rather than hours.[4]

So why do some industry experts believe that quantum commercialization is a decade away,[5] while some companies have already achieved commercial successes?[6]

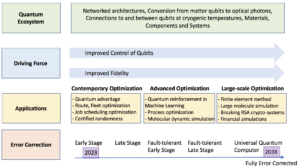

We address this apparent disconnect with a model we developed that maps the evolution of quantum computing from its beginnings to the optimistic forecasts of current researchers. Figure 1 depicts the progression of quantum computing in both time (horizontal axis) and complexity (vertical axis). The horizontal axis runs from the early stages of QC to the Universal Quantum Computer of the future. The vertical axis shows the four key pillars of QC development, and each cell depicts the application of QC for a given pillar at a given development stage.

At the early stage (today), developers face the challenge of using “error correction”[7] techniques to build qubits with sufficient fidelity and robustness, i.e., the degree to which a quantum state is preserved during a quantum operation or measurement. As a result, the early-stage quantum computers of 2023 do not yet achieve full fidelity and control of their qubits through error correction.

Figure 1: Development of Quantum Computing over Time and Complexity.
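The core idea behind error correction, redundancy plus a decoding rule, can be illustrated with the simplest scheme, the three-bit repetition code. The sketch below is only a classical analogue of the quantum bit-flip code, not a quantum error correction implementation: it assumes a hypothetical noise model in which each physical bit flips independently with probability `p`, and it decodes by majority vote. Encoding lowers the error rate from `p` to 3p² - 2p³, a large gain when `p` is small and a marginal one as `p` approaches 0.5, which is why physical qubit fidelity matters so much.

```python
import random


def logical_error_rate(p: float, trials: int = 50_000, seed: int = 0) -> float:
    """Estimate how often majority vote over 3 noisy copies returns the wrong bit.

    Assumed toy noise model: each of the 3 physical bits flips independently
    with probability p (not a model of any real device).
    """
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        # Encode logical 0 as 000, then count how many copies get flipped.
        flips = sum(rng.random() < p for _ in range(3))
        # Majority vote decodes wrongly only if 2 or more copies flipped.
        if flips >= 2:
            failures += 1
    return failures / trials


def analytic_rate(p: float) -> float:
    """Exact probability of 2 or 3 flips: 3p^2(1-p) + p^3 = 3p^2 - 2p^3."""
    return 3 * p**2 - 2 * p**3


for p in (0.01, 0.1, 0.4):
    print(f"p={p}: simulated {logical_error_rate(p):.4f}, exact {analytic_rate(p):.4f}")
```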

The Four Pillars

Below, we explore in more detail the four pillars of QC development which are being pursued in parallel:

Error Correction: This pillar depicts the stages of error correction a quantum computer can support. Error correction algorithms identify and fix errors caused by noise or other anomalies that interfere with quantum hardware.

Applications: We envision three types of applications emerging as the error rates of qubits improve. Between early-stage and late-stage error correction, quantum computers can have practical applications, such as the certified randomness[8] shown in the chart, without necessarily outperforming classical computers. This partially explains how quantum computers can have commercial value before the technology is fully developed.

Driving Forces: This pillar covers the continual progress needed to improve the quality and control of qubits. For example, deploying specific algorithms will enable the development of more robust qubits.[9]

QC Ecosystem: This pillar lists a non-exhaustive set of goals for further development of the QC platform technology. These initiatives could lead to new architectures through networking, qubits with higher fidelity, or even new superconducting materials that allow room-temperature operation.
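The certified-randomness application in the Applications pillar builds on random circuit sampling, and a common way to score whether samples really came from the intended circuit is the linear cross-entropy benchmark (XEB), F = 2^n · mean(P(xᵢ)) - 1, which Google used in its 2019 experiment. The toy below is a sketch under strong assumptions: instead of simulating an actual circuit, it draws a random pure state on a hypothetical 10 qubits (normalised complex Gaussian amplitudes, standing in for a deep random circuit's output) and compares a perfect sampler with a fully noisy, uniform one. The perfect sampler should score close to 1 and the noisy one close to 0.

```python
import random


def random_state_probs(n_qubits: int, rng: random.Random) -> list[float]:
    """Outcome probabilities of a random pure state (stand-in for a random circuit)."""
    dim = 2 ** n_qubits
    # |amplitude|^2 for amplitude = a + ib with a, b ~ N(0, 1); after
    # normalisation this is a Haar-random state (Porter-Thomas statistics).
    weights = [rng.gauss(0, 1) ** 2 + rng.gauss(0, 1) ** 2 for _ in range(dim)]
    total = sum(weights)
    return [w / total for w in weights]


def linear_xeb(probs: list[float], samples: list[int], n_qubits: int) -> float:
    """Linear XEB: 2^n times the mean ideal probability of the observed bitstrings, minus 1."""
    mean_p = sum(probs[x] for x in samples) / len(samples)
    return (2 ** n_qubits) * mean_p - 1


n = 10
rng = random.Random(42)
probs = random_state_probs(n, rng)
outcomes = range(2 ** n)

# A perfect device samples bitstrings with their ideal probabilities.
ideal_samples = rng.choices(outcomes, weights=probs, k=5000)
# A fully depolarised device outputs uniformly random bitstrings.
noisy_samples = [rng.randrange(2 ** n) for _ in range(5000)]

print(f"ideal sampler XEB: {linear_xeb(probs, ideal_samples, n):.2f}")  # close to 1
print(f"uniform sampler XEB: {linear_xeb(probs, noisy_samples, n):.2f}")  # close to 0
```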

The Amazing Race

Recently, quantum computers have attracted considerable media attention. Even the optimistic forecasts of 5-10 years ago did not predict the technological advances achieved today. For instance, a game-changing quantum advantage has been demonstrated in one application. In 2019, Google[10] used 53 qubits to perform a task known as random circuit sampling.[11] The quantum computer completed the task in 200 seconds, whereas Google estimated that a supercomputer would need about 10,000 years, roughly 1.6 billion times as long.

Classical algorithms and computers also continue to improve: in 2022, the same computation was done in a few hours on classical processors.[12] Even so, Google's sampling experiment demonstrated that a demanding computation could be carried out successfully while QC error correction was still at an early stage.
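The speedup implied by Google's 2019 figures can be checked with simple arithmetic. This sketch takes the 200 seconds and the 10,000-year supercomputer estimate quoted above and assumes a 365.25-day year; the 2022 classical result is left out because only an approximate run time ("a few hours") is quoted.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year, about 3.16e7 seconds

quantum_seconds = 200     # quantum run time reported by Google
classical_years = 10_000  # Google's supercomputer estimate
classical_seconds = classical_years * SECONDS_PER_YEAR

speedup = classical_seconds / quantum_seconds
print(f"speedup: {speedup:.2e}")  # 1.58e+09, i.e. about 1.6 billion
```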

Such excitement has fueled debate about when a Universal Quantum Computer, i.e., one using fully error-corrected qubits, will be available. Universal Quantum Computers that could, for example, shorten the development of new vaccines are still a decade away. However, as depicted in Figure 1, work on improving the fidelity and control of qubits through error correction is well underway, and a burgeoning ecosystem is forming around it. The community working on all aspects of the supply chain is growing quickly.

The Quantum Technology Landscape

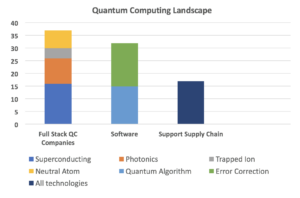

Figure 2 presents an overview of the quantum computing landscape; all of its components are essential to the development of the quantum computing ecosystem. We investigated a sample of full-stack QC companies, QC software companies, and companies contributing to the quantum supply chain.

Figure 2: Quantum Computing Technology Landscape

The landscape shows that no single technology dominates the market. Hardware/full-stack QC companies are also well supported by software companies developing new algorithms and error correction techniques. Nevertheless, much work remains in building out the QC supply chain (e.g., cryogenics, chips), which is essential to shorten development times for QC-related engineering problems.

Full-stack/hardware companies build on four main processor technologies, each with the following advantages and disadvantages:

- Superconducting: The technology of choice for big companies like IBM and Google.

◦ Pros: Can provide high-fidelity qubits.

◦ Cons: Requires cryogenic cooling.

- Photonics: Companies like PsiQuantum and Xanadu lead this segment and have raised significant funding.

◦ Pros: Small footprint, scalable.

◦ Cons: Photon losses, which can increase processing time and cause delays.

- Trapped Ions: Led by companies like IonQ and AQT.

◦ Pros: High fidelity, long coherence times, no cryogenics.

◦ Cons: Requires ultra-high vacuum and precise laser alignment, which makes the systems harder to manage.

- Neutral Atoms: Led by companies like Pasqal and QuEra.

◦ Pros: Efficient connectivity among qubits, no cryogenics.

◦ Cons: Requires high vacuum.

Software companies fall into two categories:

- Quantum Error Correction: Algorithms that identify and fix errors in quantum computers.[13] Leading companies: Q-CTRL and several full-stack companies.

- Quantum Algorithms: Software that performs a calculation on a quantum computer and must exploit at least superposition or entanglement to do so.[14]
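To make the superposition and entanglement requirement concrete, here is a minimal state-vector sketch in plain Python (no quantum SDK is assumed) that prepares a two-qubit Bell state. A Hadamard gate puts the first qubit into superposition and a CNOT entangles it with the second, so a measurement yields 00 or 11 with equal probability and never 01 or 10.

```python
import math

# State vector over basis |q0 q1> in the order 00, 01, 10, 11 (q0 is the left bit).
state = [1 + 0j, 0j, 0j, 0j]  # start in |00>


def hadamard_q0(s):
    """Apply H to qubit 0: mixes the |0b> and |1b> amplitudes for each b."""
    r = 1 / math.sqrt(2)
    return [r * (s[0] + s[2]), r * (s[1] + s[3]),
            r * (s[0] - s[2]), r * (s[1] - s[3])]


def cnot_q0_to_q1(s):
    """Flip qubit 1 when qubit 0 is 1: swaps the |10> and |11> amplitudes."""
    return [s[0], s[1], s[3], s[2]]


state = cnot_q0_to_q1(hadamard_q0(state))
probs = {f"{i:02b}": abs(a) ** 2 for i, a in enumerate(state)}
print(probs)  # 00 and 11 each about 0.5; 01 and 10 are 0
```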

The supply chain remains to be developed. It includes laser, cryogenics, and testing companies, among others.

The different technologies have their pros and cons. How they fare depends on how the industry evolves:

- Will we have a few very expensive computers placed around the world with cloud access to perform computations?

- Will we need thousands of quantum processors / computers to achieve fault-tolerant computation?

Only time will answer these questions, and the answers may depend on the type of application and/or algorithm we need to run on a machine.

Quantum Algorithms

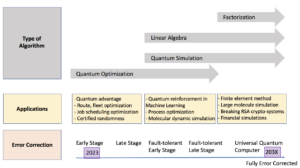

Critical to understanding the escalating capability of quantum computing is the role of quantum algorithms. Quantum algorithms are executable code, specific to quantum computing, that exploits the unique properties of the underlying quantum architecture. Figure 3 replaces the Driving Forces and QC Ecosystem rows of Figure 1 with a row for the type of QC algorithm. The four key quantum algorithms:

1) Quantum Optimization

2) Quantum Simulation

3) Linear Algebra, and

4) Factorization

can be mapped to the Applications and Error Correction stages, as shown in Figure 3.

Figure 3: Parallel Between Development Time, Application and Type of Algorithm
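Factorization is the class best known from Shor's algorithm, whose quantum part finds the period (order) r of aˣ mod N; the rest is classical number theory. The sketch below shows that reduction for toy values of N, with the order found by brute force as a classical stand-in for the quantum period-finding step. That brute-force step is exactly the one whose cost grows exponentially with the size of N on a classical machine, and it is the step a large error-corrected quantum computer would accelerate.

```python
import math


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a^r = 1 (mod n), assuming gcd(a, n) = 1.

    Brute-force classical stand-in for the quantum period-finding subroutine.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical_part(n: int, a: int):
    """Try to split n using base a; returns a factor pair, or None if a is unlucky."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g  # a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: retry with another a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None  # a^(r/2) = -1 (mod n): retry with another a
    p = math.gcd(y - 1, n)  # y^2 = 1 but y != +-1, so this is a nontrivial factor
    return p, n // p


print(shor_classical_part(15, 7))  # order of 7 mod 15 is 4, giving (3, 5)
```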

Analysis

Our analysis suggests that:

- IBM dominates the race, as it is involved in many different technologies, with work underway in hardware and in all aspects of software. However, most of these technologies are still in the R&D phase.

- Based on the funding they have raised, we identified the next big full-stack players, e.g., Pasqal, IonQ, PsiQuantum, Xanadu, Infleqtion, D-Wave, Rigetti, Quantinuum, IQM, and Nord Quantique.

- Claims of quantum advantage in current use cases versus classical computing have not yet been fully evaluated.

- There have been short-term optimization successes on limited problem sets, typically problems that scientists have studied for decades.
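Many optimization problems of this kind are posed as QUBO (quadratic unconstrained binary optimization) problems, one of the input forms, alongside the equivalent Ising model, that quantum annealers such as D-Wave's accept. The sketch below brute-forces a tiny QUBO whose coefficients are made up purely for illustration. The point is the exhaustive loop over all 2ⁿ assignments: every added binary variable doubles the search space, which is why exact classical search only works for small instances.

```python
from itertools import product

# Hypothetical 4-variable QUBO (upper-triangular, stored as a dict):
# negative diagonal terms reward selecting an item, positive off-diagonal
# terms penalise selecting conflicting pairs together.
Q = {
    (0, 0): -3, (1, 1): -2, (2, 2): -2, (3, 3): -1,
    (0, 1): 4, (1, 2): 1, (2, 3): 4, (0, 3): 2,
}


def qubo_energy(x, q):
    """Energy x^T Q x for a binary tuple x."""
    return sum(w * x[i] * x[j] for (i, j), w in q.items())


def brute_force_qubo(q, n):
    """Check all 2^n binary assignments; feasible only for very small n."""
    best = min(product((0, 1), repeat=n), key=lambda x: qubo_energy(x, q))
    return best, qubo_energy(best, q)


print(brute_force_qubo(Q, 4))  # ((1, 0, 1, 0), -5)
```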

Footnotes

1) https://newsroom.ibm.com/2022-11-09-IBM-Unveils-400-Qubit-Plus-Quantum-Processor-and-Next-Generation-IBM-Quantum-System-Two

2) https://www.mckinsey.com/industries/chemicals/our-insights/the-next-big-thing-quantum-computings-potential-impact-on-chemicals

3) https://deadcatlivecat.com/articles/canadian-company-demonstrates-quantum-advantage-with-a-fully-programmable-photonics-based-device

4) https://www.insidequantumtechnology.com/news-archive/quantum-computers-are-coming-pioneer-user-a-canadian-a-grocery-chain-save-on-foods/

5) https://www.weforum.org/reports/state-of-quantum-computing-building-a-quantum-economy/

6) https://www.nextbigfuture.com/2022/12/what-is-really-happening-with-quantum-computers.html

7) https://q-ctrl.com/topics/what-is-quantum-error-correction

8) https://www.quantamagazine.org/how-to-turn-a-quantum-computer-into-the-ultimate-randomness-generator-20190619/

9) https://techxplore.com/news/2022-01-major-quantum-fidelity.html

10) https://www.nature.com/articles/d41586-019-03213-z

11) https://arxiv.org/pdf/2210.12753.pdf

12) https://journals.aps.org/prl/abstract/10.1103/PhysRevLett.129.090502

13) https://q-ctrl.com/topics/what-is-quantum-error-correction

14) https://arxiv.org/abs/1511.04206